Brains Are Not Algorithmic

Computability, Algorithms, and the Penrose-Lucas-Gödel argument.

In the previous post, Brains Are Nothing Like Computers, I began explaining why I became skeptical of computationalism, a notion I’d initially accepted. I defined how I divide strong from weak computationalism and differentiate emulation (functional or algorithmic approaches), simulation (of the physics of the brain), and replication (of the structure of the brain) as distinct “flavors” of it.

For dessert I visited imaginary rooms envisioned by John Searle and Frank Jackson. Recently I’ve been reminded of another well-known picture for pondering, David Chalmers’s philosopher’s zombies (called “p-zeds” by SF author Robert Sawyer in Quantum Night). Be warned. P-Zeds may shamble into this post.1

I left off with Roger Penrose, Stuart Hameroff, and someone named Lucas.

The Lucas-Penrose Argument

I should begin with the caveat that the general consensus among experts, which here includes philosophers, mathematicians, and computer scientists, is that the following argument does not work. Their counterarguments are strong enough to warrant skepticism, but I find something compelling in the basic argument.

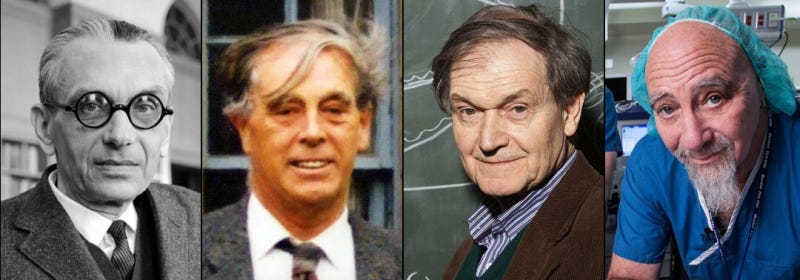

The seeds were planted by British philosopher John Lucas (1929-2020). In his paper “Minds, Machines and Gödel” (1959), Lucas bases an argument favoring free will on Gödel’s Incompleteness Theorems. In it, he asserts that no algorithm can accurately represent a mathematician. Or, by extension, anyone.2

His paper begins:

Gödel's theorem seems to me to prove that Mechanism is false, that is, that minds cannot be explained as machines. So also has it seemed to many other people: almost every mathematical logician I have put the matter to has confessed to similar thoughts, but has felt reluctant to commit himself definitely until he could see the whole argument set out, with all objections fully stated and properly met. This I attempt to do.

At the end, he concludes:

Our argument has set no limits to scientific enquiry: it will still be possible to investigate the working of the brain. It will still be possible to produce mechanical models of the mind. Only, now we can see that no mechanical model will be completely adequate, nor any explanations in purely mechanist terms. We can produce models and explanations, and they will be illuminating: but, however far they go, there will always remain more to be said. There is no arbitrary bound to scientific enquiry: but no scientific enquiry can ever exhaust the infinite variety of the human mind.

The key phrase is “no mechanical model will be completely adequate.” No mechanical model, and so no computation, can ever be completely adequate. As I’ll explore down the road, we can compute some specific thing to an arbitrary degree of accuracy, but Gödel always limits us.3

In his book “The Emperor’s New Mind” (1989), Roger Penrose extends the argument in considerable detail from a foundation of theoretical physics. He cites the Lucas paper in the References, but — based on the Index — doesn’t mention him in the text.4

I do like that Penrose makes it clear from the beginning he is speculating:

“I should make clear that my point of view is an unconventional one among physicists and is consequently one which is unlikely to be adopted, at present, by computer scientists or physiologists.” ~from the Prologue

And what has come to be known as the Penrose-Lucas argument has indeed been largely rejected. At the least, I think it lends some credence to the notion that human brains are not algorithmic machines, even in the context of the physicalist assertion that brains necessarily are machines.

Exploring this requires understanding what Gödel’s Incompleteness Theorems actually say, how they might apply to brains, and what we mean by an “algorithmic machine” as opposed to the broader category of all machines.

In what follows, keep in mind that the Lucas-Penrose argument against mechanism is distinct from the Penrose-Hameroff proposal about quantum effects. The brain may well be non-Gödelian in virtue of being an analog machine — no quantum needed.

Gödel’s Incompleteness Theorems

In 1931, mathematician Kurt Gödel upended mathematics with a paper demonstrating unexpected limits in math. In particular, Gödel showed it was impossible for any mathematical system powerful enough to express basic arithmetic to be both consistent and complete. Worse, his second theorem showed that no such system can prove its own consistency.

For a math system to be consistent is for it to never contradict itself. A consistent mathematics can never prove that 1=2. Which is not just a good thing, but a necessary thing in math. We require consistent mathematics.

For a math system to be complete is for it to be able to prove every true statement that can be expressed in the system. Our hope was that math was both consistent and capable of enumerating all true statements. That would be ideal. Gödel forever destroyed that hope. The best we get is (unprovable!) consistency and an infinite number of truths that we can “see” (intuit) are probably correct but can never prove.5
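For readers who want the formal shape of these claims, here is a standard modern statement, offered as a sketch rather than Gödel's 1931 wording. The symbols are assumptions introduced for this note: T is any consistent, effectively axiomatized formal system strong enough to express basic arithmetic, G_T is a sentence in the language of T, and Con(T) is the arithmetic sentence expressing "T is consistent". The first line uses the form later sharpened by Rosser, which needs only plain consistency.

```latex
\begin{align*}
\text{First theorem:}\quad  & \text{there is a sentence } G_T \text{ with } T \nvdash G_T \ \text{ and } \ T \nvdash \neg G_T \\
\text{Second theorem:}\quad & T \nvdash \mathrm{Con}(T)
\end{align*}
```

The first line says T is incomplete: G_T can be neither proved nor refuted inside T. The second says T cannot certify its own consistency from within.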

Casually stated, we can never prove all things we “know” are true.

This speaks to our life experience of things we “feel” are true but cannot prove.6 As beguiling and compelling as that may be, it’s more metaphorical than actual. Yet many do apply it to non-mathematical systems, including life itself. As a metaphor, that’s fine, but Gödel’s theorems are strictly about mathematical systems.

Looked at another way, though, if human consciousness is not consistent (not mathematical) — which prima facie seems the case given our ability to be conflicted and self-contrary on issues (for example, love/hate relationships) — then perhaps we do have intuitive access to truths impossible to prove factually.7

In contrast, strong computationalism asserts that the brain is an algorithmic machine, so Gödel would apply. Weak computationalism, especially in the form of replication, has more latitude to deny Gödel. I’ll explore that in future posts, but let’s move on to what we mean by algorithmic machine.

Algorithms and Turing Machines

An algorithm is a recipe for converting input numbers to output numbers. By extension, an algorithm is a description of a process — for instance, instructions on how to drive somewhere. In digital computers, though, algorithms only “crunch numbers”, and here I am concerned only with computer algorithms.

We can view an algorithm as a transformation of inputs to outputs. For example, a division algorithm turns the inputs “10” and “2” into the output “5”. The order of inputs is significant. If they were “2” and “10”, the correct output would be “0.2”. Note that, because the outputs are fixed — because algorithms are deterministic — we can in principle implement any algorithm as a lookup table indexed by the inputs.
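The lookup-table point can be sketched in a few lines of Python. This is a minimal illustration, not anyone's official construction: the names `divide`, `TABLE`, and `divide_by_lookup` are made up for the example, and the input domain is restricted to the integers 1 through 10, because a literal table can only cover finitely many inputs.

```python
from fractions import Fraction
from itertools import product


def divide(a: int, b: int) -> Fraction:
    """Division as a procedure: compute the exact quotient a / b."""
    return Fraction(a, b)


# Assumption for this sketch: a small finite domain, so the table is finite.
DOMAIN = range(1, 11)

# Precompute every answer once. The key is the ordered pair (a, b),
# so (10, 2) and (2, 10) are different entries.
TABLE = {(a, b): divide(a, b) for a, b in product(DOMAIN, repeat=2)}


def divide_by_lookup(a: int, b: int) -> Fraction:
    """Division as a lookup: no arithmetic, just retrieval."""
    return TABLE[(a, b)]


print(divide_by_lookup(10, 2))         # 5
print(float(divide_by_lookup(2, 10)))  # 0.2
```

On this domain the table and the procedure are indistinguishable from the outside: same inputs, same outputs, every time.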

Alternately, for any (computer) algorithm, there exists a Turing Machine (TM) — a computer function — that implements that algorithm. Equivalently, every algorithm can be expressed in what’s called the Lambda Calculus — the purely mathematical expression of an algorithm.
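To make "there exists a Turing Machine that implements that algorithm" less abstract, here is a toy single-tape machine that adds one to a binary number. It is only a sketch: the simulator `run_turing_machine`, the rule table `INCREMENT_RULES`, and the state names are all invented for this example, and real treatments differ in details such as tape conventions.

```python
def run_turing_machine(rules, tape, state="right", blank="_", max_steps=10_000):
    """Run a single-tape Turing machine until it enters the 'halt' state.

    rules maps (state, symbol) -> (symbol_to_write, head_move, next_state).
    """
    cells = dict(enumerate(tape))  # sparse tape: position -> symbol
    head = 0
    steps = 0
    while state != "halt":
        if steps >= max_steps:
            raise RuntimeError("step limit reached without halting")
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += {"L": -1, "R": 1, "N": 0}[move]
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, blank) for i in range(lo, hi + 1)).strip(blank)


# Binary increment: scan right to the end of the number, then carry leftward.
INCREMENT_RULES = {
    ("right", "0"): ("0", "R", "right"),
    ("right", "1"): ("1", "R", "right"),
    ("right", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),  # 1 + 1 = 0, keep carrying
    ("carry", "0"): ("1", "N", "halt"),   # absorb the carry and stop
    ("carry", "_"): ("1", "N", "halt"),   # ran off the left edge: new digit
}

print(run_turing_machine(INCREMENT_RULES, "1011"))  # 1100
print(run_turing_machine(INCREMENT_RULES, "111"))   # 1000
```

Everything the machine does is read a symbol, write a symbol, move, and change state. That is the whole repertoire.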

This gives us multiple ways of looking at algorithms: a Turing Machine, a set of Lambda Calculus statements, a lookup table, “code” (as opposed to “data”), an “algorithm”, a “program”, or an “app”. A foundational principle in computer science, the Church-Turing thesis, says these are all different forms of the same thing. One very important point: these all involve discrete symbol processing. Which is a fancy phrase for number (discrete symbols) crunching (processing).
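As one small illustration of "different forms of the same thing", here is addition written in the style of the Lambda Calculus, using nothing but one-argument functions, and checked against ordinary integer addition. This is a sketch using the standard Church-numeral encoding; the helper names `to_int` and `from_int` are mine and exist only to translate to and from Python integers.

```python
# Church numerals: the number n is "apply a function f, n times, to x".
zero = lambda f: lambda x: x
succ = lambda n: lambda f: lambda x: f(n(f)(x))
add = lambda m: lambda n: lambda f: lambda x: m(f)(n(f)(x))


def to_int(n):
    """Decode a Church numeral by counting how many times f is applied."""
    return n(lambda k: k + 1)(0)


def from_int(k):
    """Encode a non-negative Python integer as a Church numeral."""
    n = zero
    for _ in range(k):
        n = succ(n)
    return n


two, three = from_int(2), from_int(3)
print(to_int(add(two)(three)))  # 5
```

The lambda version and Python's built-in `+` look nothing alike, yet they compute the same function on the natural numbers.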

Crucially, the computers I’m talking about here are strictly mathematical machines. All they do — all they can do — is move numbers around and perform simple math operations on them (adding, subtracting, multiplying, dividing). These computers — digital computers — don’t do anything a calculator can’t do (or an abacus, for that matter).8

So, algorithms are math. Which means Gödel applies.

This is what Penrose spends a dense book exploring. His conclusion is that conventional computers can never hope to be conscious the same way our brains are. He posits that quantum computers, or quantum effects, might be necessary for consciousness.

In contrast, strong computationalism asserts there is indeed a TM — an algorithm — that implements human consciousness. As such, because Church-Turing, this algorithm can run on any conventional computer. Mind uploading and duplication come included in this package.

The Penrose-Hameroff Proposal

Stuart Hameroff is an anesthesiologist who wondered for years about the mechanism by which anesthesia renders patients unconscious. He controversially contended that it involved quantum effects in the tubulin molecules of the microtubules in brain cells. Hameroff contacted Penrose after reading “The Emperor’s New Mind” to suggest this as the mechanism behind the quantum nature of the brain.

Robert Sawyer has used this idea in several of his science fiction novels (all highly recommended for fans of near-future hard SF). In Quantum Night (2016), his protagonists discover the quantum mechanism responsible for consciousness, and they find there are three quantum states, which they label “Q1”, “Q2” and “Q3” depending on how many of three possible electrons are in quantum superposition. There is also a state with no superposition — total unconsciousness as in coma or anesthetic.

As it turns out, these three states occur in the human population with a 4:2:1 ratio, and the states correspond respectively to philosopher’s zombies (Q1: not really conscious), psychopaths (Q2: conscious but without conscience), and “quicks” (Q3: fully conscious with a conscience). And quicks are outnumbered six to one.

A science fiction fancy, but if anything like it is true, it would explain a great deal about human nature. I confess the idea held deep appeal for me. Quantum Night was the first Sawyer I read, and it made me an instant fan.9

Science fiction aside, I find the Lucas-Penrose argument against mechanism strong enough to warrant serious consideration, strong enough to make me skeptical of most forms of computationalism. If the argument is true, emulation is ruled out, and simulation is at least questionable. Replication, though, seems to me to dodge the Gödelian bullet.

The Penrose-Hameroff proposal seems on shakier ground. For one, the analog nature of the brain may be sufficient to exclude Gödel (as would replication). For another, so far there is no proposal for how quantum behavior in the microtubules affects consciousness. That said, Mother Nature uses everything at her disposal, and I can easily see quantum effects being in her toolkit.

We may already have evidence of it. It’s believed that photosynthesis leverages quantum effects. Keep in mind, too, that chemistry is quantum. We’re just so used to chemistry that we don’t think of it that way. It wouldn’t surprise me at all that Mom used quantum effects to accomplish her most astonishing and complicated creation.

Next time, I’ll get into computer simulations and the “digital dualism” that creates a divide between the physical world and the abstract world of discrete numbers.

Until then…

1. Quantum Night was my introduction to Sawyer, and I became an instant fan for several reasons, some of which, as you’ll see, apply directly to this post. And it’s Sawyer who coined “philosopher’s zombies” because said zombies are anything but philosophical.

2. On the premise that mathematicians are people, too.

3. So does mathematical chaos, but that was unknown to Gödel.

4. I mentioned last time I read TENM back in the 1990s. Looking at the Table of Contents, I’m eager to reread it from the perspective of all I’ve learned in 30 years.

5. Fortunately, a larger math system can prove the consistency of a smaller one, so we can have confidence in the smaller one. But then we need an even larger system to prove the consistency of the large one, so it’s turtles all the way down. Or up, in this case.

6. As a simple example, how can you prove you love someone? You can demonstrate it, but can you prove it beyond doubt?

7. Like love and faith.

8. In contrast, there are analog computers that use magnitudes rather than numbers. I’ll talk more about those in a future post.

9. And because I find his writing style delightful. We seem to share many of the same cultural reference points.

I've recently argued that scientific defenses of free will are pseudoscientific. What I wonder is whether any testable predictions about the mind have been derived from the theories that you discuss. If you can't test them, how can you assess whether they're true or not? And what use are they for actually understanding how the brain works? https://open.substack.com/pub/eclecticinquiries/p/the-pseudoscience-of-free-will?r=4952v2&utm_campaign=post&utm_medium=web

Seriously, Wyrd. Any person who can figure out all this can surely come up with an easy formula for simple choco-mel.